Context-Based Management – Metrics and The Dangers

“If You Cannot Measure It, You Cannot Manage It” – it’s a favorite quote of many managers. For my part, I love to measure everything in my projects. But how to translate the results of these measurements into statements about quality depends entirely on the context. It’s very important to understand how to use metrics in context-driven testing.

From the Cambridge Dictionary: “Metrics are a set of numbers that give information about a particular process or activity”. For example, in operations we use MTBF (Mean Time Between Failures) to measure the elapsed time between failures of a system or a service. In conventional testing, some teams use “Test Case Productivity” to measure how productively test cases are written, as a basis for drawing conclusions, or “Defect Acceptance” to determine how many of the defects the testing team identified during execution are valid. In agile testing, “Examined Coverage” is a metric used to show how much of the software in scope has been explored, and “Effort Density” shows how much effort has gone into the software.
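To make these definitions concrete, here is a minimal Python sketch of three of the metrics named above. The formulas are assumptions (one common formulation of each, not necessarily the one any particular team uses): MTBF as the mean gap between consecutive failures, Test Case Productivity as test cases written per hour of effort, and Defect Acceptance as the percentage of reported defects accepted as valid. The function names and the sample data are hypothetical.

```python
from datetime import datetime
from statistics import mean


def mtbf_hours(failure_times: list[datetime]) -> float:
    """Mean elapsed time, in hours, between consecutive failures."""
    if len(failure_times) < 2:
        raise ValueError("need at least two failures to measure a gap")
    ordered = sorted(failure_times)
    # Gap between each failure and the next one, converted to hours.
    gaps = [(later - earlier).total_seconds() / 3600
            for earlier, later in zip(ordered, ordered[1:])]
    return mean(gaps)


def case_writing_productivity(cases_written: int, effort_hours: float) -> float:
    """Test cases written per hour of effort (assumed formulation)."""
    return cases_written / effort_hours


def defect_acceptance_pct(valid_defects: int, reported_defects: int) -> float:
    """Percentage of reported defects that were accepted as valid (assumed formulation)."""
    return 100 * valid_defects / reported_defects


# Hypothetical data: failures at 00:00, 10:00, and 06:00 the next day.
failures = [datetime(2024, 1, 1, 0), datetime(2024, 1, 1, 10), datetime(2024, 1, 2, 6)]
print(mtbf_hours(failures))               # gaps of 10h and 20h → 15.0
print(case_writing_productivity(30, 12))  # → 2.5
print(defect_acceptance_pct(45, 50))      # → 90.0
```

None of these numbers says anything on its own; each only becomes a message about quality once you know what was tested, by whom, and under which constraints.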

Metrics bring many benefits, such as:

- They make it easier to discuss a given problem.

- They provide clues to problems.

- Standard metrics convey useful messages about the objects being measured.

However, a metric that is not put into the appropriate context is genuinely dangerous.

A true story: in a project I oversaw, the Project Manager reported to his upper management the quarterly ratio of escaped defects to the number of defects identified by the team. The number was very impressive: about 0.05 (or 0.07, or something like that). The Project Director immediately read this number as a sign of good testing and good product quality. Almost everyone was pleased with this nice number. However, it was misleading information about the quality of the product. The 0.05 should be understood as “we have hit the target of less than 1% (here, 0.05) for escaped defects in the areas our clients/users worked with during period X”. We never know what is still hidden in the software, how serious those problems are, or what their impact is.
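The gap between the number and its meaning can be sketched in a few lines of Python. This is a hypothetical illustration, not the report from the story: the `MetricReport` type, the `escape_ratio` helper, and the counts of 20 escaped vs 400 identified defects are assumptions chosen only to reproduce a ratio of 0.05. The design point is that the value is never printed without the context that limits it.

```python
from dataclasses import dataclass, field


@dataclass
class MetricReport:
    """A metric value that always travels with the context that produced it."""
    name: str
    value: float
    context: dict[str, str] = field(default_factory=dict)

    def summary(self) -> str:
        notes = "; ".join(f"{key}: {text}" for key, text in self.context.items())
        # Make a missing context impossible to overlook in the report.
        return f"{self.name} = {self.value:.2f} ({notes or 'NO CONTEXT GIVEN'})"


def escape_ratio(escaped: int, found_by_team: int) -> float:
    """Escaped defects divided by defects the team identified in the same period."""
    if found_by_team == 0:
        raise ValueError("no defects identified by the team; ratio is undefined")
    return escaped / found_by_team


# Hypothetical quarter: 20 escaped defects vs 400 identified by the team.
report = MetricReport(
    name="quarterly defect escape ratio",
    value=escape_ratio(20, 400),
    context={
        "period": "one quarter",
        "scope": "only areas clients/users actually worked with",
        "unknown": "defects in unused areas and their severity",
    },
)
print(report.summary())
```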

Bad Aspects of Metrics

Measuring and comparing elements that are not consistent. In testing, some organizations ask their managers to track the number of bugs and the number of test cases generated/executed, and to judge testing productivity from these. But these are inconsistent units: how do you decide whether Test Case A is bigger than Test Case B, or whether all test cases are equal?

Using metrics to evaluate team and individual performance, or to create competition within the team. This is very dangerous. As a consequence, the team will inflate their numbers to prove their performance instead of using the numbers to look into the facts. One team reported 12 test cases executed per day (against the client’s goal of 10) as a result of breaking long test cases into smaller ones.
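The test-case-count effect can be reproduced with a hypothetical sketch: the same 36 checks (an assumed number) are grouped first into long test cases and then into short ones. The `chunk` helper is illustrative and not taken from any real tool.

```python
def chunk(steps: list[str], size: int) -> list[list[str]]:
    """Group a flat list of checks into test cases of at most `size` steps."""
    return [steps[i:i + size] for i in range(0, len(steps), size)]


# Hypothetical day of work: the same 36 checks executed either way.
checks = [f"check_{n}" for n in range(36)]

long_cases = chunk(checks, 6)   # 6 long test cases
short_cases = chunk(checks, 3)  # 12 short test cases

for label, cases in (("long", long_cases), ("short", short_cases)):
    executed_checks = sum(len(case) for case in cases)
    print(f"{label}: {len(cases)} test cases/day, {executed_checks} checks actually run")
```

Both groupings run exactly the same checks; only the reported count changes, which is why a count of test cases says little about productivity by itself.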

With misleading information, senior managers get a false sense of the product status. For example, 90% test coverage suggests that almost all testing has been done, but senior managers don’t know that the remaining 10% were major test cases. In general, the larger the reported numbers, the better senior managers believe the testing to be. The problem is that they were not given the context associated with these numbers. As in the example above, if we provide more context, such as the fact that most of the main flows have NOT been executed because of constraints in setting up the data, the remaining 10% reveals a negative fact.
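One way to make the hidden 10% visible is to report coverage weighted by risk next to the raw percentage. The sketch below assumes a hypothetical weighting (1 for a minor test case, 10 for a main flow) and hypothetical data: 90 minor test cases executed and 10 main-flow test cases blocked. Neither the weights nor the `PlannedCase` type come from the original project.

```python
from dataclasses import dataclass


@dataclass
class PlannedCase:
    name: str
    executed: bool
    weight: int  # hypothetical risk weight: 1 = minor, 10 = main flow


def raw_coverage(cases: list[PlannedCase]) -> float:
    """Share of planned test cases executed, every case counted equally."""
    return sum(case.executed for case in cases) / len(cases)


def weighted_coverage(cases: list[PlannedCase]) -> float:
    """Share of total risk weight covered by the executed test cases."""
    total = sum(case.weight for case in cases)
    return sum(case.weight for case in cases if case.executed) / total


# Hypothetical status: minor cases done, main flows blocked by test data setup.
cases = [PlannedCase(f"minor_{i}", True, 1) for i in range(90)]
cases += [PlannedCase(f"main_flow_{i}", False, 10) for i in range(10)]

print(f"raw coverage: {raw_coverage(cases):.0%}")                 # → 90%
print(f"risk-weighted coverage: {weighted_coverage(cases):.0%}")  # → 47%
```

The weights are themselves a judgment call, so the weighted number needs its own context; its value here is only that it stops the blocked main flows from disappearing inside a 90% headline.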

Metric-Based Testing – A Circumvention Game or A Methodology: I have heard this term used by some managers who are very experienced in software testing. By it, they mean improving and predicting testing and quality management with “reliable metrics” aligned with given goals. But the beauty of a nice dashboard made of metrics hides risks. If we cling too tightly to the belief that metrics are a magical tool for making testing better, we create circumvention games and side effects in the team. Instead of spending time improving product quality, the team wastes time proving itself and improving the numbers.

A Practice for Using Metrics

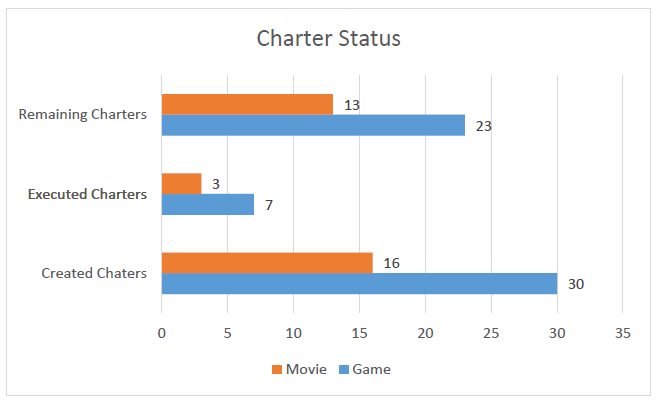

At MeU Solutions, we train our people to use metrics to reveal negative aspects rather than to say good things. The numbers above were tracked to show the progress of testing on a project at MeU Solutions. The context: the project was in the last days of a release, and these numbers temporarily say that the team is behind schedule, so a root cause analysis needs to be done. I say “temporarily” because, at this moment, these numbers are just clues to potential problems. If all the remaining charters are minor and can be executed within a few days, it will not be a problem.
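The reasoning about remaining charters can be written down as a simple check. This is a minimal sketch under assumed inputs: the `Charter` type, its `major` flag, effort estimates in team-days, and the example charters are all hypothetical, and the actual numbers from the image are not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class Charter:
    name: str
    major: bool       # hypothetical flag: does it cover a main flow?
    est_days: float   # hypothetical effort estimate in team-days


def schedule_risk(remaining: list[Charter], days_left: float) -> str:
    """Turn a 'behind schedule' number into a question about what is left."""
    effort = sum(charter.est_days for charter in remaining)
    majors = [charter.name for charter in remaining if charter.major]
    if effort <= days_left and not majors:
        return "behind on paper only: remaining charters are minor and fit the time left"
    return (f"investigate: {effort:g} days of work, "
            f"{len(majors)} major charter(s), {days_left:g} days left")


remaining = [Charter("export edge cases", False, 0.5), Charter("UI wording", False, 1.0)]
print(schedule_risk(remaining, days_left=3))

remaining.append(Charter("payment main flow", True, 2.0))
print(schedule_risk(remaining, days_left=3))
```

The same “behind schedule” signal leads to two different conclusions depending on what the remaining work actually is, which is the root cause analysis the paragraph above calls for.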

Sitting in retrospective sessions with my Scrum teams, I always ask them what context produced these numbers. Without context, the metric data in a report is meaningless. Numbers are open to manipulation and can present such an incomplete view that it is hard to tell whether the product or the project is in good shape. If your defect-trend numbers show the number of defects rising over time, it doesn’t necessarily mean your test team has been doing well; it may point to problems in your development team.

Metrics are like heuristics in management: their value depends on the context. Using metrics appropriately requires great care and good skills.